Deep Survival with Laurence Gonzales: Bad News: Disaster is Inevitable. Here’s How to Avoid It.

Whether on mountains, in air travel, or on Wall Street, disaster is bound to strike. It’s just part of the system. So, how to avoid it?

I’m going to tell you a mountaineering disaster story, and it may sound familiar. Risks were taken. Precautions were minimal. Mistakes were made. Conditions deteriorated rapidly. It wasn’t just one thing that caused it, but once it got going, the situation really got out of hand. It reminds me a lot of the recent global financial meltdown.

In the spring of 2000, John Miksits and Craig Hiemstra, two experienced climbers, met at the Bunny Flat trailhead, which leads to the Cascade Gulch route on Mount Shasta, in California. They had met online but had never climbed together before. The weather was gorgeous, and the pair spent their first night at Hidden Valley. The next day the wind kicked up, and they camped at 11,500 feet at Lake Sisson. The wind continued to increase, and high, thin clouds moved in. Nevertheless, they convinced themselves that there was no reason to worry and planned to leave for the summit at 1 a.m. the next day. By bedtime, the wind was blowing 35 miles an hour, but Miksits and Hiemstra still left in the predawn hours.

With sunup came whiteout conditions and 65-mile-an-hour winds. By 8 a.m. on April 12, two climbers at Lake Sisson got a radio call from Miksits. He and Hiemstra had actually made the summit and were now only 400 feet above the camp but couldn’t find it. The two climbers at camp called the weather service, but when they tried to relay the forecast to Miksits and Hiemstra, they were unable to reach them. The climbers at Lake Sisson began to descend in the blizzard, which would drop six feet of new snow. Though they repeatedly radioed Miksits, they never reached him.

The next day the search began, sweeping five helicopters into the vortex of miscalculations, along with searchers on skis and snowmobiles working the ground. Two days later, on April 15, Hiemstra’s body was found at 10,300 feet, his neck snapped from a fall he’d taken. The search for Miksits went on for another four days, at which point a National Guard Black Hawk helicopter crashed at 11,600 feet and rolled downhill. Amazingly, the pilots and passengers survived with only minor injuries. While the two climbing rangers who’d been on board continued to search through dangerous avalanche territory, the other passengers became the objects of a rescue-within-a-rescue, as they hiked down to a landing spot so that yet another Black Hawk could pick them up.

It wasn’t until Memorial Day weekend, when the snow melted, that Miksits’s body was found. Beneath him the gear was neatly laid out “in an organized fashion,” as the report on the accident said. This behavior is typical of someone suffering from hypothermia, which was listed as the official cause of Miksits’s death.

There are a number of immediately obvious observations that we can make about this accident. One is: Don’t go into harm’s way with people you don’t know. Another is that, as the accident report states, “In the high mountains, even ones we are familiar with, there is but one season: winter.” But there is a much subtler point that I’d like to make about this accident.

Like many other famous calamities, it has all the earmarks of what the sociologist Charles Perrow calls “system accidents.” In his book Normal Accidents, Perrow puts forth the theory that some systems are so complex that accidents are bound to happen. Moreover, “processes happen very fast and can’t be turned off” in these systems. “Recovery from the initial disturbance is not possible; it will spread quickly and irretrievably for at least some time,” he writes.

When Perrow first came up with this concept in the 1980s, the science of self-organizing systems was in its infancy. Self-organizing systems generally involve numerous agents (people) acting by fairly simple rules that cause the actions of one or more agents to influence what others do. For example, my partner is going to climb higher; therefore, I’m going to climb higher. Researchers who study complex systems often use markets as an example. In any type of market, all the agents can do is buy and sell. But the system as a whole produces very complex and mathematically orderly behavior, represented by fluctuations in price. The recent boom and bust in the housing and mortgage market is an example of how complex systems behave, as is the near collapse of the banking industry and the economy as a whole. Over time, markets and economies experience a great number of small fluctuations, a smaller number of medium-size fluctuations, and the rare but inevitable busts and booms. The frequency of these events is related to their size by a mathematical function known as a power law. This means, for example, that a change in price of 2 percent might be three times as likely as a change in price of 4 percent.
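To put rough numbers on that last example, here is a minimal Python sketch. It assumes frequency is proportional to size raised to a negative power, which is the simplest form of a power law. The three-to-one ratio is only the illustrative figure from the paragraph above, not a measured property of any market, and the function names are invented for the example.

```python
import math


def power_law_exponent(size_ratio: float, likelihood_ratio: float) -> float:
    """Exponent alpha in frequency ~ size**(-alpha).

    If an event `size_ratio` times bigger is `likelihood_ratio` times
    rarer, then size_ratio**alpha == likelihood_ratio.
    """
    return math.log(likelihood_ratio) / math.log(size_ratio)


def relative_frequency(size: float, reference_size: float, alpha: float) -> float:
    """How often an event of `size` occurs, relative to `reference_size`."""
    return (size / reference_size) ** (-alpha)


# Assumption taken from the text: a 2 percent move is three times
# as likely as a 4 percent move (size doubles, frequency drops to a third).
ALPHA = power_law_exponent(size_ratio=2.0, likelihood_ratio=3.0)

for move in (2, 4, 8, 16):
    freq = relative_frequency(move, 2, ALPHA)
    print(f"{move:>2}% move: {freq:.3f} times as likely as a 2% move")
```

Under that assumption, every doubling in size cuts the frequency to a third, so the big events grow rare but never drop to zero. That is the sense in which busts are "rare but inevitable."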

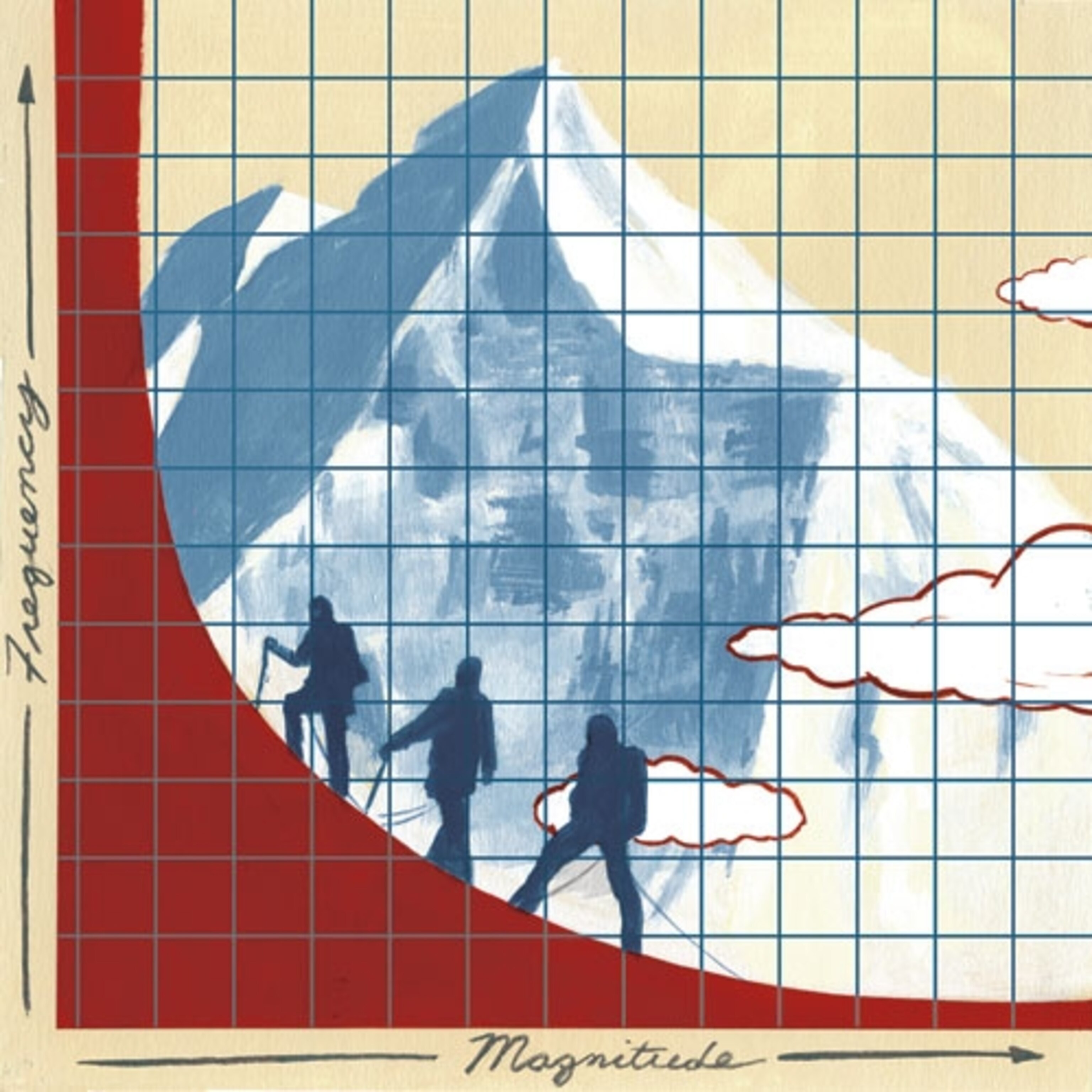

Many kinds of complex systems exhibit this same type of behavior. There are earthquakes of all sizes, for example, but there are hundreds every day that are all but undetectable without sensitive instruments. If you graph these events, they produce what’s known as a long tail or a fat tail, depending on which end of the graph you prefer (see illustration). As the magnitude of the event goes up, the frequency goes down. All the numerous small events are on the left, while the bigger events dwindle in frequency to the right.

Climbers and rescuers and all their equipment come together to form complex systems around popular mountains. There are numerous small accidents and a few rare catastrophes, characterized by unintended effects that tend to grow larger rather than settling down. For example, once trouble arises, members of a climbing team may do unexpected things. Analysts thought that Miksits may have become hypothermic and Hiemstra may have attempted to descend to get help, falling and breaking his neck in the process. That put them out of radio contact. That, in turn, mobilized rescuers. Any time rescuers head for the mountain, especially with equipment like helicopters, the system becomes more complex and tightly coupled, making the likelihood of additional mistakes rise, not fall. The discovery of Hiemstra’s body gave an urgency to the search that may have led the National Guard pilots to take more chances in order to find Miksits, leading to the crash of the helicopter. The crash, in turn, mobilized more rescuers and more helicopters. And so the system grew and became more tightly coupled and more complex. No one designs such systems and their interactions. They arise naturally.

Complex self-organizing systems also depend on processing something. There is a flow through the system. In the case of the stock market, the flow is money. In the case of a mountain populated by climbers and watched by rescuers, the flow is the energy of the climbers (and later, the energy of their rescuers). As we climb, the chemical energy we burn—from food we eat—is converted into the energy of our position on the mountain and the heat required to keep our body temperature constant. The important task is to release energy in a controlled rather than an uncontrolled way. If you fall, much of your potential energy will be released very quickly. Bad idea. If you get caught in a storm, all your body heat may be released too fast for you to replace it.

If you decide to couple yourself to such a self-organizing system, there are a few things that are useful to know. The first is that it is impossible to prevent accidents in such a system as a whole. As Perrow points out, once a system reaches a critical level of complexity, there is no clear cause for the accidents. They become an inherent characteristic of the system. In that regard, it is inevitable that a system as complex as airline travel, for example, will be beset by many small events (a blown tire or a failed toilet) and the occasional large crash in which everyone is killed.

Of course, attention to safety is important. It’s certainly possible to increase the rate at which accidents occur if enough people behave irresponsibly, or to reduce it if everybody is alert. But you won’t change the general nature of the fat tail curve. Accidents of all sizes will continue to occur, whether on mountains, in air travel, or on Wall Street.

The recent meltdown of worldwide credit markets is a classic systems accident, involving many tightly linked components, lots of energy, and no single cause. In this case, millions of home buyers (and speculators) bought houses they couldn’t afford by taking loans they couldn’t easily pay back. Their lenders, instead of monitoring those loans carefully, were able to sell them off en masse as securities to third parties, such as hedge funds, who used borrowed money (“leverage”) to buy them. This multiplied the risk and increased the monetary “energy” in the system. Along the way, traditional forms of protection—such as loan documentation for borrowers and capital requirements for financial institutions, the kinds of things that could prevent small problems from turning into major ones—had been lessened or removed. When people started defaulting on their loans in unprecedented numbers, the problem spread quickly and unstoppably, like a mountain climber falling down a steep hill without crampons, an ice ax, ropes, or partners who could help.

Changing the frame through which we view the world and our actions in it can lead us to temper our behavior. We can train ourselves to be more alert and prepare for the possibility that the systems we engage in may turn on us. If Miksits and Hiemstra had noticed that they were part of a self-organizing system and that, in whiteout conditions, it was in a critical state, they might have waited out the storm. Home buyers and lenders could have done the same, realizing that they were taking on a significant amount of risk with very little safety net. It might not have prevented the financial meltdown, but it would have taken many out of harm’s way. Although it’s mathematically certain that these accidents will happen wherever we put together a complex system, they don’t have to happen to you.
